Brokers

Definition

An intermediary platform that handles communication between two or more applications

Functions:

Decouple message publisher and consumer

Store the messages

Routing of messages

Monitoring and management of messages

Messaging

A way of exchanging messages from point A to point B or many points C.

Enables distributed communication that is loosely coupled.

A message sender sends a message to a destination, and the message recipient can retrieve the message from the destination. However, the sender and the receiver do not have to be available at the same time in order to communicate. (Example: email).

Example scenarios:

Send data to many applications without calling their APIs directly from your application

Process operations in a specific order, as in a transactional system

Monitor data feeds, such as the number of registrations in an application

The component that receives the message from the sender, and from which the recipient retrieves it, is called a message broker or messaging middleware.
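The store-and-retrieve behaviour described above can be sketched with a toy in-memory broker. This is only an illustration under simplifying assumptions: `InMemoryBroker`, `send`, and `receive` are hypothetical names, and a real broker would add persistence, acknowledgements, and network access.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Toy broker: stores messages per destination until a recipient retrieves them."""

    def __init__(self):
        # One FIFO queue per destination name.
        self._queues = defaultdict(deque)

    def send(self, destination, message):
        # The sender only needs the destination name, not the recipient.
        self._queues[destination].append(message)

    def receive(self, destination):
        # Return the oldest stored message, or None if nothing is waiting.
        queue = self._queues[destination]
        return queue.popleft() if queue else None


broker = InMemoryBroker()
broker.send("registrations", {"user": "alice"})
broker.send("registrations", {"user": "bob"})

# The recipient can come online later and still get the messages in order.
first = broker.receive("registrations")
second = broker.receive("registrations")
empty = broker.receive("registrations")
```

Because the sender and the recipient only share a destination name, neither has to be running when the other one acts, which is the loose coupling described above.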

RabbitMQ

Messaging system modeled on the AMQP (Advanced Message Queuing Protocol) standard

Language-neutral: clients can publish and consume messages in any programming language, much as any language can speak TCP or HTTP
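In the AMQP model, publishers send messages to an exchange, and the exchange routes them to queues according to bindings. The sketch below imitates a direct exchange (exact routing-key match) in plain Python. It does not talk to RabbitMQ; `DirectExchange`, `bind`, and `publish` are hypothetical names chosen for illustration.

```python
from collections import defaultdict, deque


class DirectExchange:
    """Toy direct exchange: delivers a message to every queue bound to its routing key."""

    def __init__(self):
        # routing key -> list of bound queues
        self._bindings = defaultdict(list)

    def bind(self, queue, routing_key):
        self._bindings[routing_key].append(queue)

    def publish(self, routing_key, body):
        # Look up bound queues without creating an entry for unknown keys.
        queues = self._bindings.get(routing_key, [])
        for queue in queues:
            queue.append(body)
        return len(queues)  # number of queues that received the message


emails = deque()
audit = deque()

exchange = DirectExchange()
exchange.bind(emails, "user.created")
exchange.bind(audit, "user.created")
exchange.bind(audit, "user.deleted")

delivered = exchange.publish("user.created", "alice")
unrouted = exchange.publish("order.paid", "order-1")
```

A message whose routing key has no binding reaches no queue in this sketch; how a real broker treats unroutable messages depends on its configuration.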

Redis

In-memory data structure store.

Used as a database, cache and message broker.

Supports data structures such as strings, hashes, lists, sets, sorted sets with range queries, bitmaps, hyperloglogs, geospatial indexes with radius queries and streams.

Has built-in replication, transactions and different levels of on-disk persistence, and provides high availability via Redis Sentinel and automatic partitioning with Redis Cluster.

Works with an in-memory dataset. Depending on your use case, you can persist it either by dumping the dataset to disk every once in a while, or by appending each command to a log.
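The two persistence styles can be contrasted with a toy key-value store. This is a rough sketch of the idea only, not how Redis implements it: `TinyStore` and its methods are hypothetical, and the "disk" is just a string and a Python list.

```python
import json


class TinyStore:
    """Toy in-memory key-value store with two persistence styles."""

    def __init__(self):
        self.data = {}
        self.log = []  # stands in for an append-only file on disk

    def set(self, key, value):
        self.data[key] = value
        # Append-only style: record every write command as it happens.
        self.log.append(json.dumps(["SET", key, value]))

    def snapshot(self):
        # Snapshot style: serialize the whole dataset at one point in time.
        return json.dumps(self.data)

    @classmethod
    def from_snapshot(cls, blob):
        store = cls()
        store.data = json.loads(blob)
        return store

    @classmethod
    def from_log(cls, lines):
        # Rebuild the dataset by replaying every logged command in order.
        store = cls()
        for line in lines:
            command, key, value = json.loads(line)
            if command == "SET":
                store.data[key] = value
        return store


store = TinyStore()
store.set("visits", 1)
blob = store.snapshot()  # taken after the first write
store.set("visits", 2)   # happens after the snapshot

restored_from_snapshot = TinyStore.from_snapshot(blob)
restored_from_log = TinyStore.from_log(store.log)
```

The snapshot loses the write made after it was taken, while the replayed log recovers it. That is the trade-off between the two options: periodic dumps are cheaper, the command log is more complete.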

You can use Redis from most programming languages out there.

RabbitMQ vs Redis

RabbitMQ

Pros

RabbitMQ is widely regarded as one of the most mature and capable message brokers available. It can be configured to use SSL/TLS, which provides an additional layer of security.

Its interface is much more versatile than that of Redis when Redis is used as a message broker.

Supports clustering, and handles it very well.

Can scale to around 500,000 messages per second or more, depending on hardware and configuration.

Cons

Requires the Erlang runtime.

Little of its configuration can be done through code. (RabbitMQ must be set up and configured before you can start implementing your task queue.)

Compared with RabbitMQ, Redis has a slight edge for lightweight messaging needs, because it can serve several roles at once (database, cache, and broker).

Redis

Pros

Because it works on data held in main memory, Redis is fast and scalable.

Extremely easy to set up, use and deploy.

Provides an advanced in-memory key-value cache.

Cons

Redis was not originally designed to be a message broker. It supports basic broker operations, but it is not the preferred option when powerful message routing is needed.

High latency when handling large messages. Redis is better suited to small messages.

Personally, I use Redis to implement very basic, straightforward message queues because it doubles as a key-value datastore, but for large, scalable applications with large messages RabbitMQ is the way to go. You can of course use a different message broker altogether, such as Kafka, ActiveMQ, or ZeroMQ. A side-by-side comparison of them all is an article for another day.
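A basic queue of the kind mentioned above is often built on a Redis list, pushing at the head with LPUSH and popping from the tail with BRPOP. The sketch below assumes a client object exposing `lpush` and `brpop` methods shaped like those of the redis-py package. `enqueue`, `dequeue`, and `FakeListClient` are hypothetical helpers, and the fake client exists only so the example runs without a Redis server.

```python
import json
from collections import defaultdict, deque


def enqueue(client, queue_name, payload):
    """Push a JSON-encoded message onto the head of a Redis list."""
    client.lpush(queue_name, json.dumps(payload))


def dequeue(client, queue_name, timeout=1):
    """Pop the oldest message from the tail, or return None if none arrives in time."""
    item = client.brpop(queue_name, timeout=timeout)
    if item is None:
        return None
    _key, raw = item  # brpop returns a (list name, value) pair
    return json.loads(raw)


class FakeListClient:
    """Hypothetical in-memory stand-in so the sketch runs without a server."""

    def __init__(self):
        self._lists = defaultdict(deque)

    def lpush(self, name, value):
        self._lists[name].appendleft(value)

    def brpop(self, name, timeout=0):
        # Unlike the real command, this never blocks; it returns immediately.
        items = self._lists[name]
        return (name, items.pop()) if items else None


client = FakeListClient()
enqueue(client, "emails", {"to": "alice"})
enqueue(client, "emails", {"to": "bob"})

first = dequeue(client, "emails")
second = dequeue(client, "emails")
third = dequeue(client, "emails")
```

Against a real server, a redis-py client could presumably be passed in place of `FakeListClient`. Note that this pattern has no routing and no acknowledgements, which matches the "basic operations" caveat in the cons above.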

Kafka

Distributed streaming platform

A streaming platform has 3 key capabilities:

Publish and subscribe to streams of records, similar to a message queue or enterprise messaging system.

Store streams of records in a fault-tolerant durable way.

Process streams of records as they occur.

Generally used for 2 broad classes of applications:

Building real-time streaming data pipelines that reliably get data between systems or applications

Building real-time streaming applications that transform or react to the streams of data

To understand how Kafka does these things, let's dive in and explore Kafka's capabilities from the bottom up.

First a few concepts:

Kafka is run as a cluster on one or more servers that can span multiple datacenters.

The Kafka cluster stores streams of records in categories called topics.

Each record consists of a key, a value, and a timestamp.
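As a rough mental model of these concepts, a topic can be pictured as an append-only list of records. The sketch below is a deliberate simplification: `Record` and `Topic` are hypothetical classes, and it ignores partitions, replication, and the cluster itself. The position of a record in the log is what Kafka calls an offset.

```python
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Record:
    """A single record: key, value, and timestamp."""
    key: str
    value: str
    timestamp: float


@dataclass
class Topic:
    """Toy topic: an append-only log of records."""
    name: str
    records: list = field(default_factory=list)

    def append(self, key, value):
        # Records are only ever added at the end of the log.
        self.records.append(Record(key, value, time.time()))
        return len(self.records) - 1  # position (offset) of the new record

    def read_from(self, offset):
        # Reading does not remove records, so any reader can re-read from any offset.
        return self.records[offset:]


signups = Topic("signups")
first_offset = signups.append("user-1", "alice")
second_offset = signups.append("user-2", "bob")

later_values = [record.value for record in signups.read_from(1)]
all_keys = [record.key for record in signups.read_from(0)]
```

Unlike the queue examples earlier, reading here leaves the records in place, which is why several independent readers can process the same stream.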

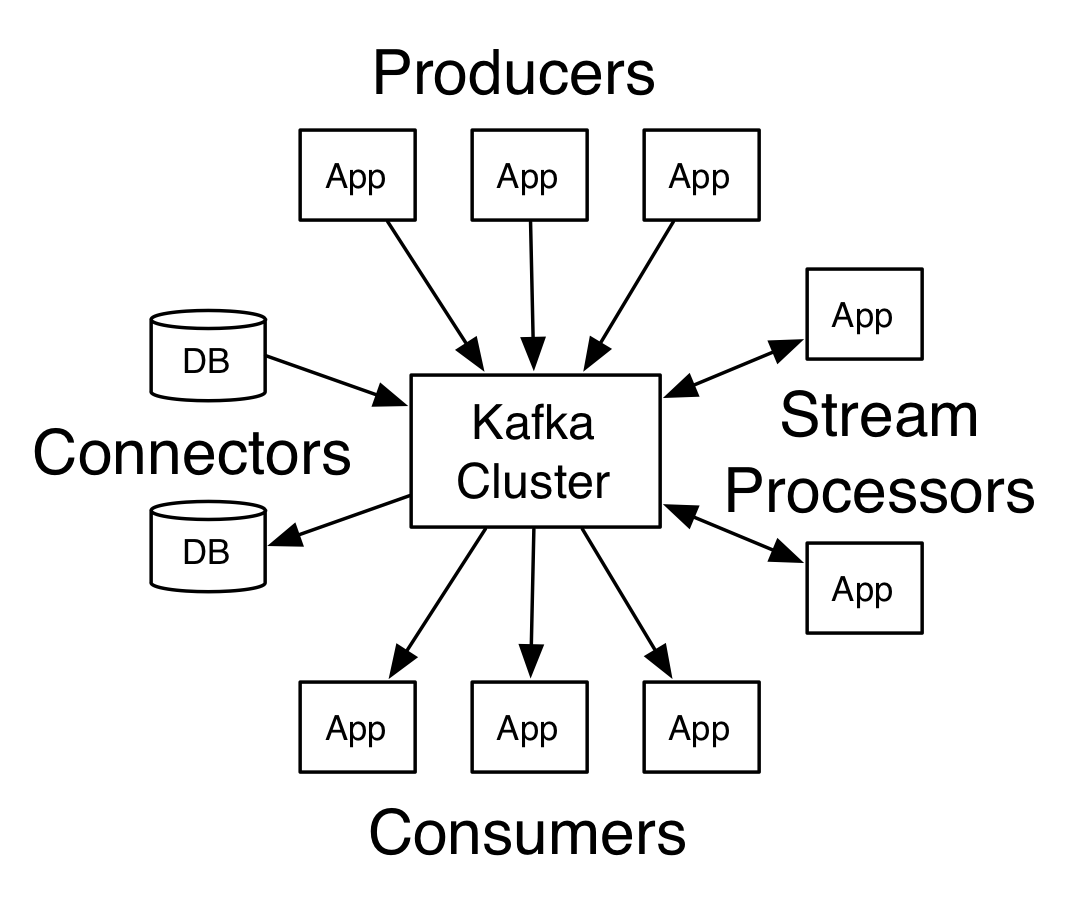

Kafka has 4 core APIs:

Producer API allows an application to publish a stream of records to one or more Kafka topics.

Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.
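The roles of the producer, consumer, and stream processor can be illustrated with a plain-Python toy. None of the functions below belong to a real Kafka client: `produce`, `consume`, and `stream_process` are hypothetical names, and the "cluster" is just a dictionary of lists.

```python
from collections import defaultdict

# topic name -> list of (key, value) records; stands in for the cluster
topics = defaultdict(list)


def produce(topic, key, value):
    """Producer role: publish a record to a topic."""
    topics[topic].append((key, value))


def consume(topic, offset=0):
    """Consumer role: read records from a topic, starting at an offset."""
    return topics[topic][offset:]


def stream_process(input_topic, output_topic, transform):
    """Stream processor role: read an input topic, write transformed records to an output topic."""
    for key, value in consume(input_topic):
        produce(output_topic, key, transform(value))


produce("raw-signups", "u1", "alice@example.com ")
produce("raw-signups", "u2", " BOB@Example.com")

# Normalize each email address and publish the result to a second topic.
stream_process("raw-signups", "clean-signups", lambda v: v.strip().lower())

cleaned = consume("clean-signups")
raw_count = len(consume("raw-signups"))
```

The input topic is left untouched, so the same records could feed other processors as well. A connector would play a similar role at the edges, moving records between a topic and an external system such as a database.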

Communication between clients and servers uses a simple, high-performance, language-agnostic TCP protocol. This protocol is versioned and maintains backwards compatibility with older versions. The Kafka project provides a Java client, but clients are available in many languages.
